The information age is the period in which we live. Every day, new technologies are being developed to make life easier, more sophisticated, and better for everyone. Today, technology is developing at an almost exponential rate. New technology assists businesses in lowering costs, improving customer experiences, and boosting profitability.

By 2030, an estimated 50 billion devices are expected to be connected to the internet. This milestone is closer than expected, thanks to the COVID-19 pandemic accelerating companies’ digital transformation initiatives. Understanding the most recent IT innovations is essential for developing your career and discovering new prospects.

There are always new trends because the technology sector is dynamic and constantly evolving. It might be challenging to stay current with recent breakthroughs and advances. It is crucial to keep up with new developments to choose which technologies to invest in and how to implement them.

Some technology advances will significantly change how we live and work, while others will be more subtly disruptive. Everyone should be aware of the top 10 technological trends for 2022.

Pros of Technology

1. Saves time:

Saving time is technology’s most significant benefit. Because we can complete tasks faster, we can use the time saved for other important work. Numerous tasks, including cooking, cleaning, working, and commuting, are completed more quickly thanks to technology.

2. Increased interaction:

In the past, it was difficult to communicate with someone who lived in a distant part of the world. Remember when communication meant sending pigeons or letters, which took days or weeks? Thanks to technology, the world has become smaller, and it is now simple to communicate with someone anywhere on the planet.

3. We can easily pass our time:

Technology has made it simple for us to pass the time. Using the internet, we can instantly stream countless movies, play games like Clash of Clans, read any newspaper, or browse any website with a useful article.

4. Enhanced educational techniques as a result of technology:

Technology options ranging from software and tools down to pencils make it simpler to learn new skills. Numerous tools can be incorporated into the modern classroom to simplify teaching. Not long ago, even a calculator was not a standard classroom tool. Thanks to advances we now consider basic, we have access to more information than previous generations encountered over most of their lifetimes.

Cons of Technology:

1. Reduces jobs:

Technology has made many tasks far more efficient, which is often the justification for letting it take the place of people. Machines and software can now perform jobs that formerly required human labor, resulting in fewer jobs.

Businesses today favor technology that can complete jobs faster and more accurately than people. As a result, as technology advances, humans are increasingly being replaced by robots and algorithms.

2. It creates dependencies:

Our reliance on tools, processes, and devices has grown as technology has developed. We rarely need to ponder or remember anything because everything is immediately available to us in vast online databases. Even a small device such as a calculator can reduce the need for mental arithmetic or mathematical skill, because equations can be solved by simply entering them.

These dependencies reduce human capital, which often brings a greater risk of unemployment in particular industries. A machine such as a restaurant’s self-service touch screen may even replace human staff entirely.

3. Human effort is decreased by technology:

As the saying goes, we need to work smarter, not harder. Most technical advancements aim to reduce the effort we need to put in to get a result. The implication is clear: we can outsource work to machines. With less work left for humans, some forms of human labor are gradually becoming obsolete. As automation eliminates some jobs, programming, coding, and related support services will become the new employment sectors.

4. Privacy problem:

Privacy becomes a significant concern as we integrate technology into our daily lives. Private data and information must be kept secure. Numerous technologies and apps promise privacy, but are they sufficient to protect users from potential privacy risks?

In conclusion, technology has both benefits and drawbacks. Whether the good outweighs the harm is for users to decide. The most crucial factor is how we choose to advance technology.

List of Top 10 Best And New Technology Trends In 2022:

Technology can completely transform and reimagine our business in a connected environment. We’re hopeful that the newest technological advancements can help us handle some of our most pressing business concerns and create a more egalitarian, resilient society as we prepare for a post-pandemic future.

Here are the top 10 tech trends that, in our opinion, have the potential to influence the upcoming year, as determined by our industry insights and discussions with customers and colleagues:

1. Smarter Devices:

Artificial intelligence has made our world smarter and smoother-running. It goes beyond mere simulation of humans to make our lives easier and more convenient. As data scientists develop AI household robots, appliances, work devices, wearables, and much more, these smarter devices are here to stay in 2022 and beyond.

Sophisticated software applications are almost always needed to make our work lives more manageable. As more businesses transition to digital environments, smarter devices are another high-demand addition to the IT sector. Success in almost every higher-level position now calls for strong IT and automation skills. For this reason, Simplilearn’s RPA course may help you master these skills so that you can excel in your job, whether in management, marketing, or IT. The top jobs you can pursue are listed below:

- IT Director

- Data Scientists

- Product Testers

- Product Directors

- Automation Engineers

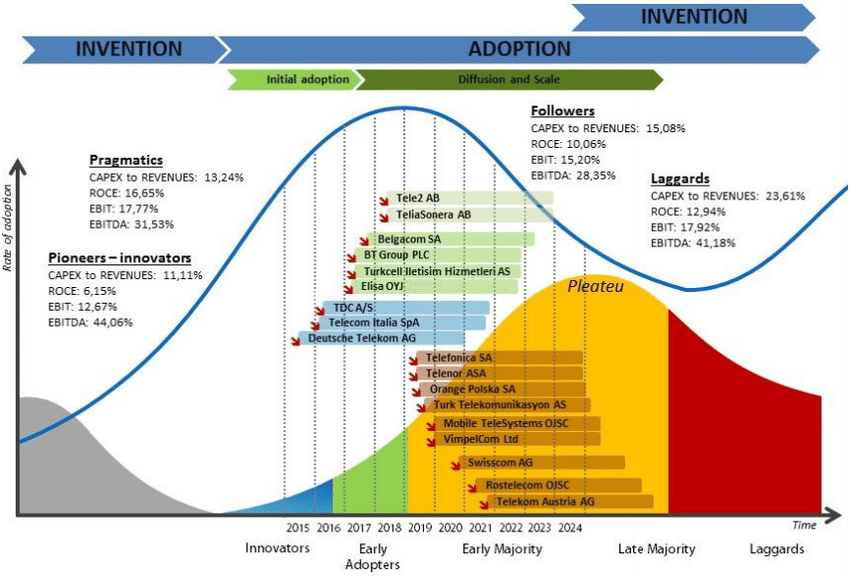

2. 5G technology adoption:

This year, there will be more than one billion 5G subscriptions, according to Ericsson’s Mobility Report, as reported by Statista Research.

While seamless video streaming was one of the significant advancements made by 4G, 5G promises up to 100 times the speed, making uploads, downloads, data transfers, and broadcasts considerably faster.

The Internet of Things (IoT), which comprises internet-connected smart devices communicating with one another, will benefit from 5G. In contrast to 4G, numerous devices can join a 5G network without significantly degrading speed, latency, or reliability. This is due to network slicing, which creates separate virtual networks tailored to different services and types of devices.

Additionally, 5G mobile networks are designed to support as many as one million devices per square kilometer, whereas 4G mobile networks struggle to maintain connectivity in congested areas.
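To put these figures in perspective, here is a rough back-of-the-envelope sketch in Python. The 100x speed-up and the one-million-devices-per-square-kilometer density come from the text above; the 4G baseline of 50 Mbit/s and the 2 GB file size are illustrative assumptions, not measured values.

```python
# Back-of-the-envelope 5G arithmetic. The baseline speed and file size are
# illustrative assumptions; real-world throughput varies widely.

ASSUMED_4G_MBPS = 50.0          # hypothetical 4G download speed (megabits/s)
SPEEDUP_5G = 100                # "100 times the speed" figure from the text
DEVICES_PER_KM2_5G = 1_000_000  # device density figure from the text


def download_seconds(file_gigabytes: float, speed_mbps: float) -> float:
    """Time to transfer a file, ignoring protocol overhead."""
    megabits = file_gigabytes * 8 * 1000  # 1 GB = 8000 megabits (decimal units)
    return megabits / speed_mbps


def devices_per_square_meter(devices_per_km2: int) -> float:
    """Convert a per-square-kilometer density to a per-square-meter density."""
    return devices_per_km2 / 1_000_000  # 1 km^2 = 1,000,000 m^2


if __name__ == "__main__":
    t4 = download_seconds(2.0, ASSUMED_4G_MBPS)
    t5 = download_seconds(2.0, ASSUMED_4G_MBPS * SPEEDUP_5G)
    print(f"2 GB at the assumed 4G speed: {t4:.0f} s")
    print(f"2 GB at 100x that speed:      {t5:.1f} s")
    print(f"Devices per square meter:     {devices_per_square_meter(DEVICES_PER_KM2_5G):.0f}")
```

Under these assumptions, a download that takes several minutes on 4G would finish in a few seconds, and the quoted density works out to about one connected device for every square meter.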

3. Cybersecurity:

Since the early days of computing, cybersecurity has played a major role in keeping users safe. Although it is not a new trend, cybersecurity measures must be continuously enhanced because technology is developing quickly. Threats and hacking attempts are increasing in number and severity, which calls for stronger security mechanisms and defenses against malicious attacks.

Hackers continuously attempt to steal data because it is one of the most valuable assets today. For this reason, cybersecurity will remain an in-demand technology that must keep improving to stay ahead of attackers. Demand for cybersecurity experts is reportedly growing three times faster than demand for other tech jobs. A widely cited industry estimate put the annual cost of cybercrime at roughly $6 trillion by 2021, and businesses are spending more on cybersecurity as they increasingly recognize its importance.

Cybersecurity roles include ethical hacker, security engineer, and chief security officer. Because these roles are so important to delivering a secure user experience, the pay is often higher than in other technical jobs.

4. Artificial Intelligence and Machine Learning:

Now, businesses and researchers are utilizing their data and computing capacity to offer the world superior AI capabilities.

Machine vision is one of the major trends in AI. Computers can now see and identify objects in a picture or video. Significant strides are also being made in language processing, allowing machines to recognize human speech and respond to us.

This year, low-code and no-code tools will also be a prevalent trend. By building AI with drag-and-drop graphical user interfaces, we will be able to create custom applications without being constrained by our coding abilities.

5. Full Stack Development:

Full-stack development is a technology trend in the software industry that is picking up steam, and it is becoming more important as the IoT grows. Full-stack development covers both the front end and the back end of a website or application.

Businesses aim to create applications that are both comprehensive and easy to use. This calls for a thorough understanding of both server-side programming and web development. If you have the necessary expertise, your skills as a web developer will always be in demand.

Keeping up with the most recent web development trends is critical if you’re considering a career in this field. To start, several online courses for web development are available.

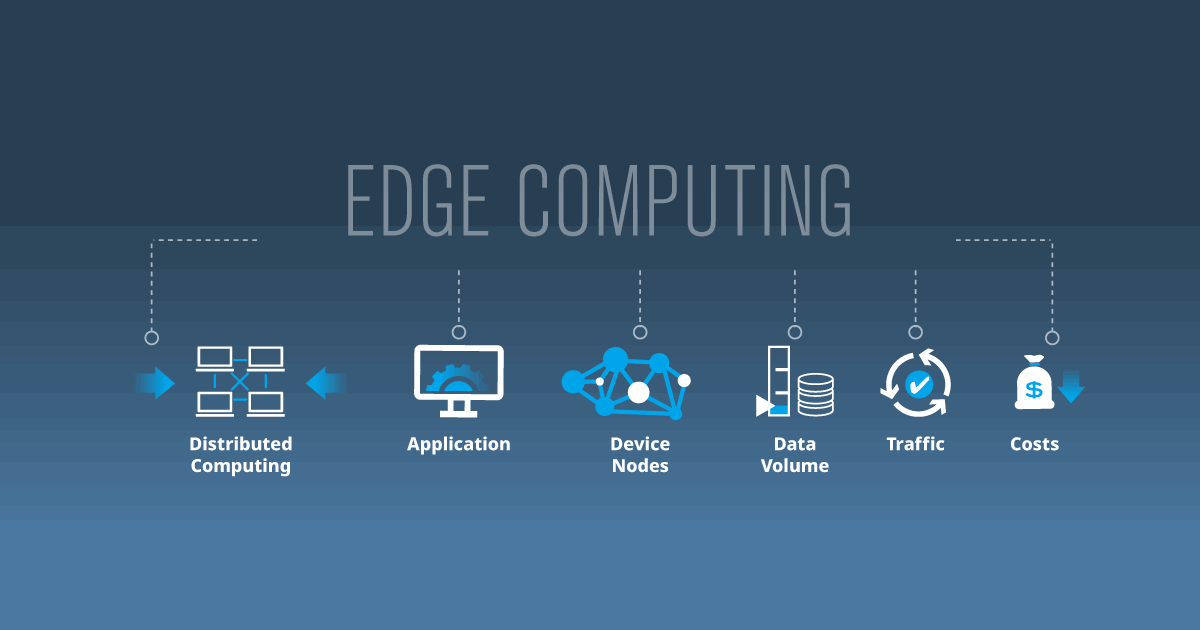

6. Edge Computing:

Currently, millions of data points about users are being gathered from a variety of sources, including social media, websites, emails, and web searches. As the amount of data collected grows dramatically, technologies such as cloud computing fall short in some situations.

Until about a decade ago, cloud computing was one of the fastest-growing technologies. It has since become mainstream, with the market dominated by large providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform.

As more businesses embraced cloud computing, they discovered the technology’s limitations. Edge computing helps organizations avoid the latency that comes from sending data to a distant data center for processing. Processing can instead happen “on the edge,” closer to where the data is generated. This makes edge computing suitable for time-sensitive data processing in remote areas with limited or no connectivity.
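The idea of processing data close to its source can be sketched in a few lines of Python. This is a minimal illustration, not a real edge platform: the `EdgeNode` class, its threshold, and the temperature readings are all hypothetical. The node makes the time-sensitive decision locally and only prepares a compact summary for upload when a connection is available.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class EdgeNode:
    """Hypothetical edge device that processes sensor readings locally."""
    alert_threshold: float
    readings: list = field(default_factory=list)

    def ingest(self, value: float) -> bool:
        """Store a reading; return True if it needs an immediate local reaction."""
        self.readings.append(value)
        # The time-sensitive decision is made here, with no network round trip.
        return value > self.alert_threshold

    def summarize(self) -> dict:
        """Build the compact summary that would be sent upstream, then reset."""
        if not self.readings:
            return {"count": 0}
        summary = {
            "count": len(self.readings),
            "mean": mean(self.readings),
            "max": max(self.readings),
        }
        self.readings.clear()
        return summary


if __name__ == "__main__":
    node = EdgeNode(alert_threshold=80.0)
    for temperature in [70.0, 72.0, 95.0, 71.0]:
        if node.ingest(temperature):
            print(f"local alert: {temperature}")
    print(node.summarize())
```

Sending a summary rather than every raw reading is one common way to cope with limited connectivity; a real deployment would also need buffering, retries, and security, which are omitted here.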

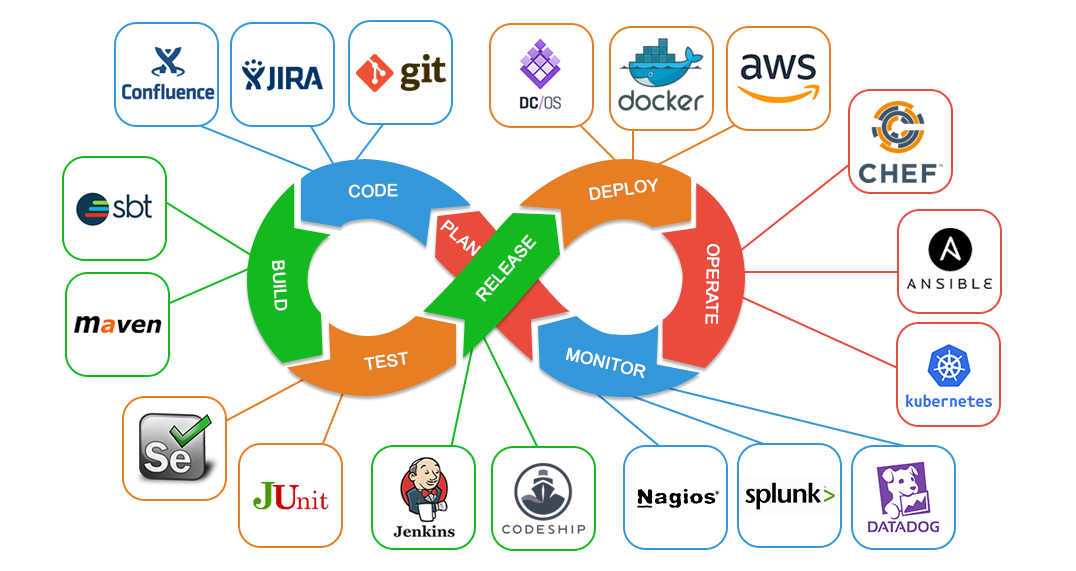

7. DevOps:

“DevOps” refers to practices for improving and automating the software development process, and it is among the most in-demand technologies today. If you are considering a career in this field, numerous online DevOps courses are available, and you can use them to stay current on the newest DevOps trends and tools.

DevOps has gained popularity as a method for companies to:

- Reduce software development cycle times

- Raise overall quality

Promoting cooperation between development and operations teams is one of the key objectives of DevOps. By doing this, businesses can deliver software updates and new features to their customers more efficiently. It can also help reduce the likelihood of defects and improve software quality.

8. Extended Reality:

Virtual reality, augmented reality, mixed reality, and all other technologies that simulate reality are collectively referred to as “extended reality.” It is an important technological trend since many of us are eager to break away from the so-called real boundaries of the world.

This technology, which creates a reality without any tangible presence, is popular among gamers, medical specialists, and retail and modeling professionals. Within extended reality, gaming is a key area for popular careers that call for a passion for online gaming rather than advanced degrees. To pursue a successful career in this specialty, you can enroll in game design, animation, or editing programs. In the meantime, check out the top positions in extended reality, VR, and AR:

- Extended Reality Architect

- Lead Front Engineer

- Software Developer

- AR/VR Support Engineers

9. Quantum Computing:

The goal of quantum computing is to create computer technology based on the principles of quantum theory, which explains how energy and matter behave at the atomic and subatomic scales. In other words, rather than using only 0s and 1s, it computes based on the probability of an object’s state prior to measurement.
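The notion of computing with probabilities before measurement can be illustrated with a toy simulation of a single qubit in plain Python. This is a simplified sketch under stated assumptions: it uses only real-valued amplitudes, the function names are made up for illustration, and it runs on an ordinary computer rather than on quantum hardware.

```python
import math
import random

# A single qubit as two amplitudes: (amplitude of |0>, amplitude of |1>).
ZERO = (1.0, 0.0)


def hadamard(state):
    """Apply a Hadamard gate, which turns |0> into an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Born rule: each outcome's probability is its amplitude's squared magnitude."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


def measure(state, rng=random):
    """Simulate one measurement; returns 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


if __name__ == "__main__":
    superposed = hadamard(ZERO)
    print(probabilities(superposed))  # roughly (0.5, 0.5)
    counts = [0, 0]
    for _ in range(1000):
        counts[measure(superposed)] += 1
    print(counts)  # about half zeros and half ones; varies per run
```

Before measurement the qubit is described by both amplitudes at once; each measurement yields a single 0 or 1, with frequencies that follow the computed probabilities.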

Quantum computing can query, analyze, and act on data regardless of its source. It has been applied to efforts to limit the spread of COVID-19 and to develop potential vaccines. For certain problems, quantum computers can be many times faster than conventional machines. By 2029, the quantum computing sector is expected to generate more than $2.5 billion in revenue. To work in this field, you need a grounding in information theory, machine learning, linear algebra, and quantum mechanics.

10. Internet of Things (IoT):

IoT is one of the most promising technologies of the decade. Many modern “things” and devices can now connect to the internet. The Internet of Things is a network of such connected devices. Without human intervention, devices in the network can exchange messages, gather data, and transfer it from one device to another.

There are countless real-world uses of the Internet of Things (IoT), such as using smart devices that connect to your phone to track activity, monitoring the doors of your home remotely, or switching applications on and off. Businesses use IoT for various purposes, including predicting when a device will fail so that preventive steps can be taken before it is too late, and monitoring activity in remote areas from a central hub.
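The predictive-maintenance use case can be sketched as follows. This is a deliberately simple illustration, not a production technique: the `MaintenanceMonitor` class, the window size, the limit, and the vibration readings are all hypothetical, and real systems typically rely on richer models than a moving average.

```python
from collections import deque


class MaintenanceMonitor:
    """Hypothetical monitor that flags a device when recent readings trend too high."""

    def __init__(self, window: int, limit: float):
        self.limit = limit
        self.recent = deque(maxlen=window)  # keeps only the last `window` readings

    def add_reading(self, value: float) -> bool:
        """Record a sensor reading; return True if maintenance should be scheduled."""
        self.recent.append(value)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data yet
        average = sum(self.recent) / len(self.recent)
        return average > self.limit


if __name__ == "__main__":
    monitor = MaintenanceMonitor(window=3, limit=5.0)
    for vibration in [4.0, 4.5, 5.0, 6.0, 7.0]:
        if monitor.add_reading(vibration):
            print(f"schedule maintenance (reading {vibration})")
```

Averaging over a window rather than reacting to a single reading keeps one noisy measurement from triggering a maintenance call.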

Over 50 billion devices are expected to be connected to the Internet of Things by 2030. In the next two years, global spending on this technology is predicted to reach $1.1 trillion. IoT is still in its early stages but will grow in the coming years. Working in this field requires an understanding of the principles of AI, machine learning, information security, and data analytics.